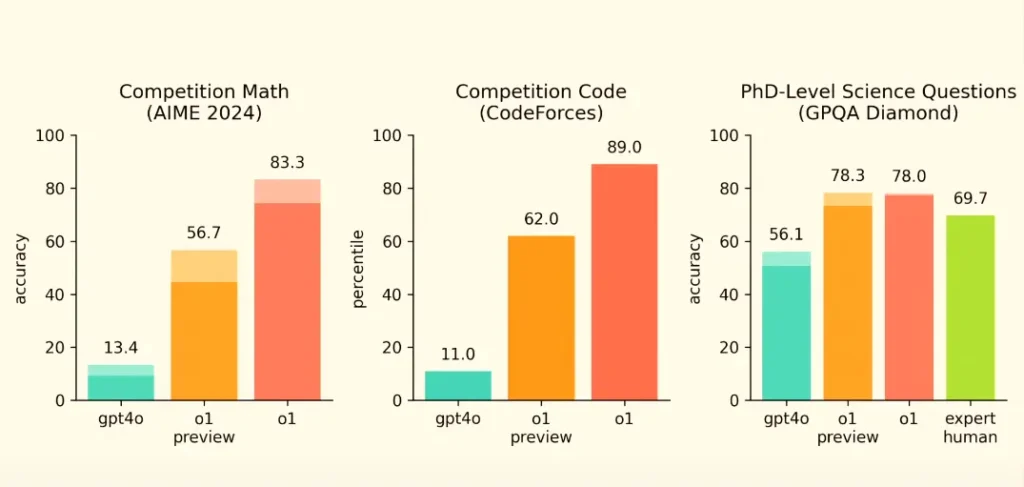

Last week, OpenAI rolled out OpenAI o1, the first ChatGPT model built to reason through a problem before answering instead of only predicting the next word. The presentation video sent shockwaves through the competitive programming community, especially among students hoping to earn college admissions brownie points through competitive programming. According to OpenAI, o1 ranks in the 89th percentile on competitive programming questions (Codeforces), and it costs only US$20 a month to access.

This is unsettling news, especially with a new USACO season right around the corner. I immediately began testing OpenAI o1 on USACO questions. Through a series of not-very-scientific tests, I arrived at the following results.

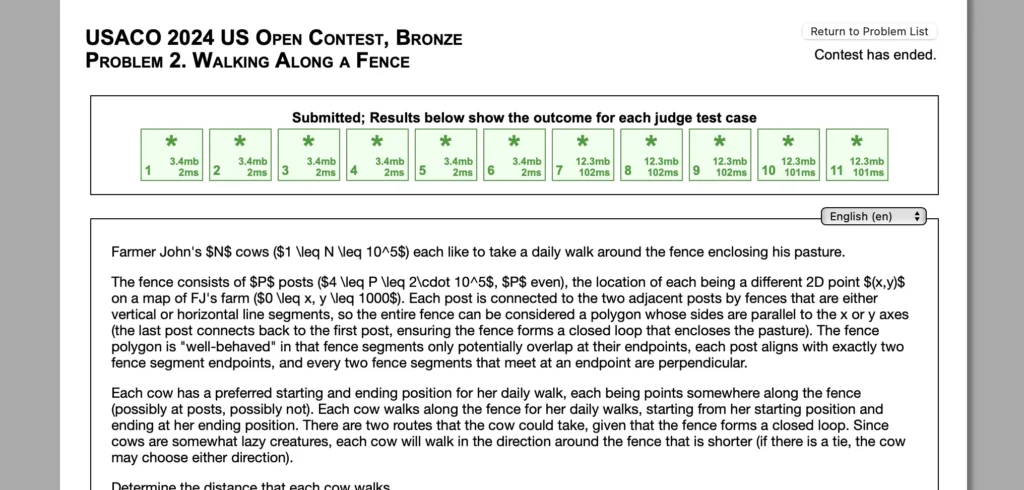

- OpenAI o1 passed USACO Bronze effortlessly. o1 can complete USACO 2024 US Open Bronze questions in about a minute, and its generated solutions pass all test cases on the first try.

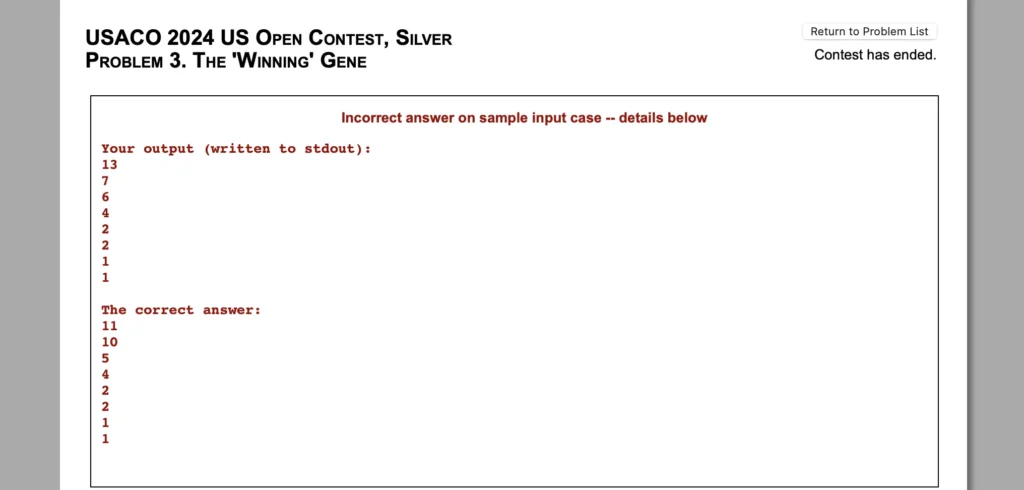

- o1 fails at Silver questions. I tested o1 on multiple USACO Silver questions from the same competition, and it could not pass the sample test case for any of them. I asked o1 to fix its implementation and supplied the relevant information, but it was still unable to do so.

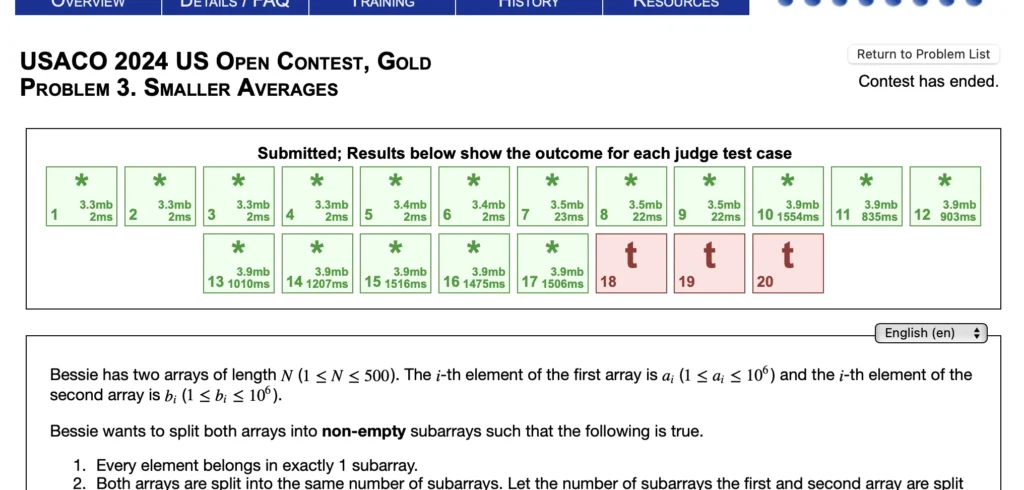

- o1 mostly succeeds at Gold questions. For the question in the screenshot, o1 initially passed the first 9 test cases (2 subtasks). After I pointed out the time requirements, o1 revised its solution and passed the first 17 test cases (3 subtasks). At this level of performance, o1 could be promoted to Platinum quite easily.

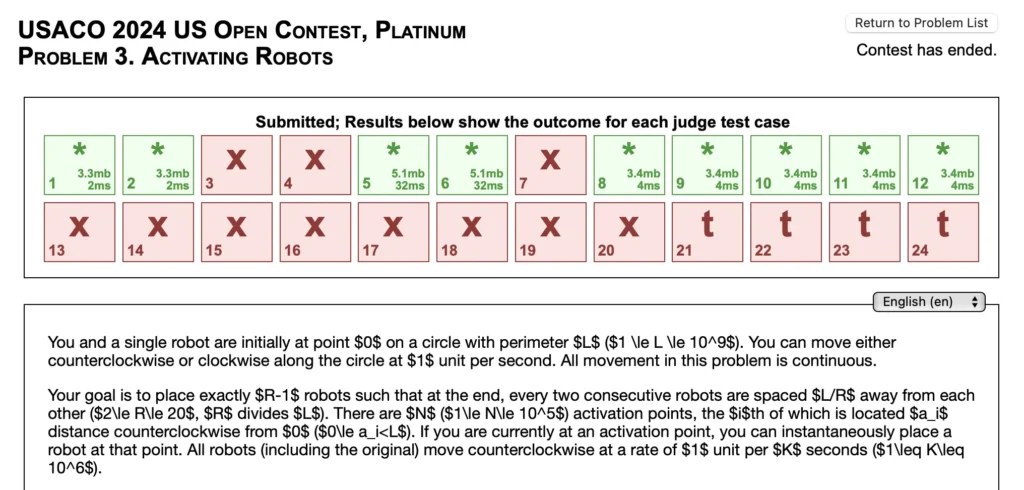

- o1 mostly fails at Platinum questions. It gets a number of test cases right, but not consistently. Across multiple rounds of testing on the same question, as in the screenshot, the number of test cases passed ranged from 4 to 7.

Clearly, this is going to severely undermine the integrity of USACO, especially considering o1’s impressive performance on USACO Gold questions. Students targeting undergraduate admissions in the US have hardly any choice other than USACO for competitive programming, and USACO cannot protect against the use of generative AI, even though its contest rules clearly prohibit it. Even if o1 cannot yet solve Silver and Platinum questions, newly trained models will certainly be able to within the next year.

Word is going around that USACO is beginning to die. And yes, from a college admissions perspective, it probably is. But “beginning to die” is an understatement: for college admissions, USACO has long been dead. In recent years, USACO has been targeted by cheating that it cannot effectively combat because its contests are unproctored. In China, companies charge a hefty price for USACO “tutoring,” which is organized cheating in disguise. Similar activities are rampant throughout the US as well.

USACO has taken several measures against cheating. In the 2023-2024 season, USACO introduced rules banning the use of generative AI in competitions. USACO also introduced “certified contests” for its Gold and Platinum levels: students receive a “certified score” if they start their individual contest windows within 15 minutes of the start of the contest. Students need a certified score to be promoted from Gold to Platinum and to be considered for USACO Camp. These measures, however, cannot defend against the new wave of generative AI. It is nearly impossible to tell AI-generated code from human-written solutions, and USACO has not added the proctoring requirements that would be needed to stop cheating entirely.

Still, USACO might not be entirely done for. Admissions officers are aware of the situation. A few months ago, at a local college fair, I spoke with an admissions officer from Vanderbilt and expressed my concerns about the extent of cheating in these competitions. The admissions officer replied,

> Going through competitions is one way that we can see how deep your interest runs in a certain area. In fact, there are other things that we gain from simply knowing that you do competitions outside of just the results.

During early summer break, I also discussed the USACO situation with a private college counselor. He expressed cautious pessimism on the subject, believing that a high USACO level still carries weight, but that it is no longer as easy to prove. He explained that to truly realize the value of USACO in college admissions, students must back up their abilities with participation in selective science summer programs, such as the Research Science Institute, and with strong letters of recommendation from professors they have worked with. With a combination of these factors, students with genuine ability in computer science can still demonstrate both their passion and their skills.

On a less utilitarian note, USACO remains a great way to sharpen basic problem-solving, algorithms, and data structures skills. USACO questions also help develop mathematical maturity. These are fundamental computer science abilities that matter at every stage of one’s career. So there are still practical benefits to participating in USACO. Even if it is getting more and more difficult by the day, it may still be worthwhile.

(Dec 8, 2024)